Summary:

- January 11th, 2026: Google UCP Launch: AI Agents Unlock $6T Retail Market

- January 12th, 2026: Anthropic Launches CoWork: 0-Code Apps for Enterprise

- January 13th, 2026: Microsoft’s $4B Community AI Plan: Heating Homes With Data

- January 14th, 2026: OpenAI Signs $10B Cerebras Deal: 15x Faster AI Agents

- January 14th, 2026: MedGemma 1.5 & MedASR: Google Opens The AI Hospital

Google UCP Launch: AI Agents Unlock $6T Retail Market

Date: Jan 11, 2026

Google launches Universal Commerce Protocol to standardise agentic shopping. See how partners like Shopify and Walmart are unlocking zero-click AI checkout.

Google has officially launched the Universal Commerce Protocol (UCP), an open standard designed to transform how consumers shop online by allowing AI agents to handle the entire purchasing journey. Announced on Jan 11, 2026, this strategic initiative aims to standardise "agentic commerce," enabling AI assistants to discover products, negotiate capabilities, and execute transactions directly on behalf of users. The launch features high-profile partnerships with major retailers and platforms, including Shopify, Walmart, Target, Wayfair, and Etsy, alongside payment giants Visa and Stripe.

This move represents a shift from traditional search-based traffic to a model where AI agents actively complete tasks. By integrating with UCP, brands can make their inventory machine-readable and purchasable directly within Google AI Mode and Gemini, removing the need for users to click through to external websites. Google emphasises that, unlike in marketplace models, merchants remain the "Merchant of Record," retaining full ownership of customer data and relationships while reducing friction and cart abandonment rates in the $6 trillion global e-commerce market.

Universal Commerce Protocol: Standardising AI Checkout

The core of this update is the establishment of a shared language between AI agents and merchant systems. UCP eliminates the need for bespoke integrations by providing a unified framework for product discovery, cart management, and secure payments. This allows a single AI agent to interface with thousands of different retailers seamlessly. The protocol is compatible with existing standards such as Agent2Agent and the Model Context Protocol, ensuring broad interoperability across the tech stack.

For businesses, the immediate application is "native checkout." Shoppers using Google Gemini or Search’s AI Mode can now complete purchases for eligible products without ever leaving the chat interface. Google has also introduced "Business Agent," a tool that allows brands to deploy AI sales associates directly in Search to answer queries and facilitate sales. By supporting tokenised payments and verifiable credentials, UCP ensures that these autonomous transactions remain secure while dramatically shortening the path from intent to purchase.

Strategic Pivot: Enabling The Agentic Economy

Google’s launch of UCP serves as both a defensive and an offensive play against competitors like OpenAI, which is also developing agentic commerce infrastructure. By creating an open standard, Google aims to prevent a fragmented ecosystem and ensure it remains the central hub for digital commerce as user behaviour shifts toward conversational AI. The protocol turns "intent" into the new unit of value, moving beyond the traditional cost-per-click model.

Looking ahead, Google has outlined a roadmap to expand UCP capabilities and deepen the functionality of agent-led shopping:

- Support for multi-item carts and complex bundles across different vendors.

- Account linking to integrate users' existing loyalty programs and rewards automatically.

- Post-purchase support features allowing agents to handle order tracking and returns.

- Direct Offers, a new ad format that triggers exclusive discounts at the precise moment of purchase intent.

- Global expansion of the protocol to markets outside the US throughout 2026.

Anthropic Launches CoWork: 0-Code Apps for Enterprise

Date: Jan 12, 2026

Anthropic unveils CoWork, a tool allowing non-coders to build functional software. The platform targets the $132B low-code market with 60% faster build times.

Anthropic has officially released CoWork, a new platform designed to let non-technical employees build fully functional internal tools using natural language. Launched on Jan 12, 2026, the tool leverages the advanced reasoning of Claude to bridge the gap between ideation and deployment, allowing users to generate dashboards, workflows, and databases without writing a single line of code. This release positions Anthropic directly against Microsoft and OpenAI in the race to dominate the $132 billion low-code/no-code market.

The platform aims to democratise software creation within large organisations. Early enterprise partners like Asana and Notion report that CoWork has reduced internal tool development time by 60%, enabling product managers and marketers to solve technical bottlenecks independently. By shifting the focus from "coding assistance" to "outcome generation," Anthropic is betting that the future of enterprise software lies in natural language interfaces rather than traditional integrated development environments.

CoWork Capabilities: Natural Language to Active Software

The core value of CoWork is its ability to function as an autonomous software engineer rather than just a code generator. Claude interprets conversational requests such as "build a tracker for Q1 inventory with a notification trigger" and instantly constructs the necessary backend logic and frontend UI. Unlike standard assistants that output text snippets for developers to implement, CoWork handles the hosting, security, and execution of the application entirely within the Anthropic ecosystem.

This "sandbox" environment supports real-time iteration. Users can modify their live applications by simply asking Claude to "add a filter by region" or "export data to CSV," with changes applied instantly. The system is built with enterprise-grade guardrails, ensuring that apps created by non-engineers comply with data privacy standards. Anthropic claims this reduces the "shadow IT" risk by keeping citizen-developed tools within a managed, visible infrastructure.

Enterprise Strategy: Scaling The Automated Workforce

Anthropic is positioning CoWork as a solution to the global developer shortage, allowing technical staff to focus on core product architecture while business teams handle operational tooling. The move underscores a shift in the AI sector from chatbots to actionable, productive agents that deliver tangible work products.

To support widespread adoption, Anthropic has outlined a roadmap focused on integration and governance:

- Rollout of Enterprise Admin Controls to manage permissioning, data access, and usage limits across generated apps.

- Upcoming API integrations with Salesforce and HubSpot to allow CoWork applications to pull and write live business data.

- Launch of a dedicated App Library in Q2 2026 where organisations can template and share successful internal tools.

- Expansion of CoWork availability to Team plan subscribers starting at $30 per user per month.

- Development of "multi-agent" collaboration where CoWork apps can trigger actions in other software autonomously.

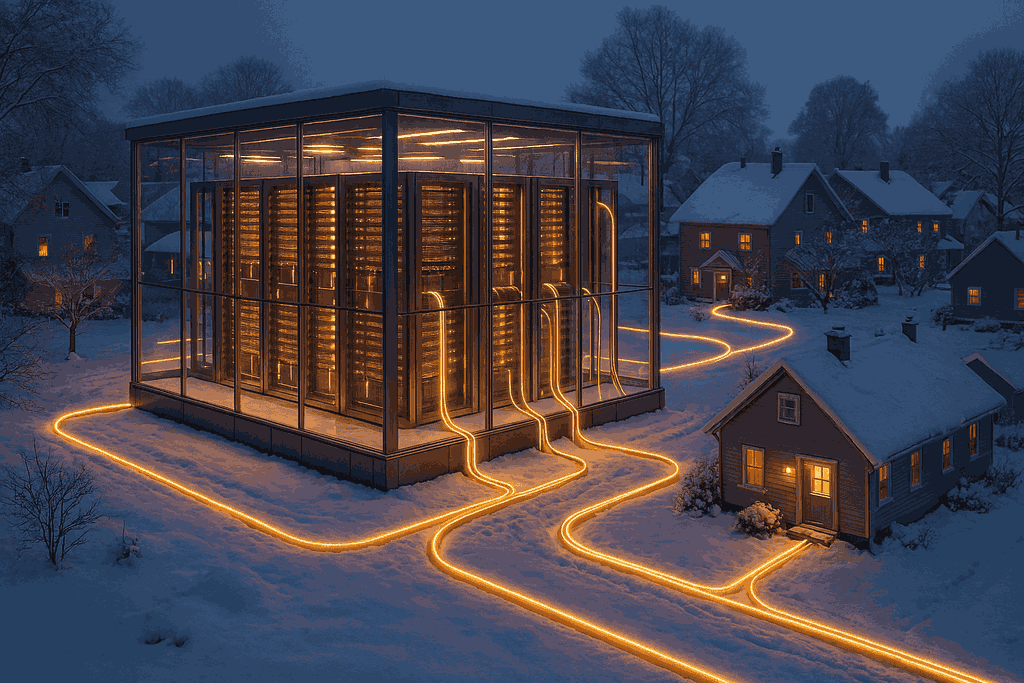

Microsoft’s $4B Community AI Plan: Heating Homes With Data

Date: Jan 13, 2026

Microsoft unveils the "Community-First AI Infrastructure" framework. The $4 billion initiative transforms data centers into community utility hubs.

Microsoft has formally announced the Community-First AI Infrastructure initiative, a $4 billion strategy designed to radically redesign how hyperscale data centers interact with local environments. Unveiled on Jan 13, 2026, by Vice Chair and President Brad Smith, the framework shifts focus from isolated industrial facilities to "symbiotic infrastructure" that directly benefits host municipalities. The plan addresses growing regulatory pushback and energy grid constraints by ensuring new AI facilities contribute tangible resources, such as residential heating and stabilised power grids, rather than merely consuming them.

The initiative launches with three pilot "Symbiotic Data Centers" planned for Wisconsin, Finland, and Quebec. Unlike traditional models, these facilities are engineered to capture 95% of server waste heat and channel it into local district heating systems, effectively subsidising energy costs for nearby residential areas. Microsoft has committed to a "Local Benefit Agreement" for all future builds exceeding 50 megawatts, mandating that infrastructure projects include legally binding contributions to local broadband expansion, water replenishment, and technical workforce training.

Symbiotic Design: Turning Waste Into Utility

The technical core of this update is the deployment of Microsoft's proprietary "Thermal Reuse Loop" technology. This system integrates directly with municipal utility pipes, converting the immense heat generated by NVIDIA and AMD AI accelerators into a saleable, low-cost commodity for local heating grids. By turning a waste product into a utility service, Microsoft projects it can lower local residential heating bills by up to 30% in participating districts while reducing the data center's own carbon footprint.
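For a rough sense of scale, the heat-recovery claim can be turned into back-of-envelope arithmetic. The sketch below assumes that essentially all electrical power drawn by the servers ends up as heat (a common approximation, not a figure from Microsoft) and applies the 95% capture rate to a facility at the 50 MW threshold mentioned earlier. The function name is hypothetical.

```python
def recoverable_heat_mw(it_load_mw: float, capture_fraction: float = 0.95) -> float:
    """Estimate thermal power (MW) available for district heating.

    Assumption: all electrical load is dissipated as heat, and
    `capture_fraction` of that heat is recovered (95% per the article).
    """
    if it_load_mw < 0:
        raise ValueError("it_load_mw must be non-negative")
    if not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("capture_fraction must be between 0 and 1")
    return it_load_mw * capture_fraction


if __name__ == "__main__":
    # A facility right at the 50 MW Local Benefit Agreement threshold.
    print(f"{recoverable_heat_mw(50.0):.1f} MW of recoverable heat")
```

Under these assumptions, a 50 MW site would yield roughly 47.5 MW of usable heat; how many homes that serves depends on local climate and network losses, which the article does not specify.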

Beyond thermal exchange, the initiative introduces "Grid-Interactive Efficient Buildings" (GEBs). These facilities utilise massive onsite battery arrays to store renewable energy during peak production hours and release it back to the local grid during high-demand periods. This capability transforms the data center from a burden on the electrical infrastructure into a stabilising asset. The framework also allocates $500 million specifically for the "AI Skilling Corps," a program designed to train 100,000 local residents in fiber optics, cooling systems maintenance, and network security to ensure jobs remain within the community.

Operational Shift: Securing The License to Operate

Microsoft is positioning this framework as essential for the long-term viability of the AI industry. With energy consumption from AI projected to double by 2028, the company acknowledges that winning local support is now as critical as securing silicon. The Community-First model aims to preempt moratoriums on data center construction by proving that these facilities can function as public goods rather than extractive industries.

To ensure accountability and sustained progress, Microsoft has published a timeline of commitments tied to the new framework:

- Deployment of Thermal Reuse Loop technology in 20 global locations by Q4 2027.

- Achievement of Water Positive status in all new community-designated sites within 12 months of operation.

- Launch of a Community Dashboard providing real-time transparency on water usage, noise levels, and energy contributions.

- Integration with Azure to allow local governments to use onsite compute for civic planning at no cost.

- Expansion of the program to Asian and Latin American markets beginning in 2028.

OpenAI Signs $10B Cerebras Deal: 15x Faster AI Agents

Date: Jan 14, 2026

OpenAI secures 750MW of compute from Cerebras in a $10B+ bet on wafer-scale chips. The move aims to break NVIDIA's grip and power real-time agents.

OpenAI has signed a massive multi-year partnership with chip startup Cerebras Systems, valued at over $10 billion, to secure critical computing infrastructure for its next generation of AI models. Announced on Jan 14, 2026, the deal commits Cerebras to supplying 750 megawatts of dedicated compute capacity through 2028. This strategic pivot marks OpenAI's most significant effort to date to diversify its hardware supply chain beyond NVIDIA, addressing the severe global shortage of AI processors while targeting a dramatic reduction in inference latency for its 900 million weekly users.

The partnership focuses specifically on "inference"—the process of running live AI models rather than training them. OpenAI will utilise Cerebras' "wafer-scale" processors, which integrate memory and compute on a single giant silicon wafer, to power latency-sensitive workloads. OpenAI claims this architecture can deliver responses up to 15 times faster than traditional GPU clusters. This speed is viewed as essential for the upcoming wave of "agentic AI"—autonomous bots that require near-instantaneous reasoning to negotiate, code, or browse the web without user lag.

Wafer-Scale Compute: The "Inference" Engine

The deal validates Cerebras' unconventional approach to chip design. Unlike standard chips, which are cut from a silicon wafer, a Cerebras processor uses the entire wafer as a single unit, boasting 4 trillion transistors and eliminating the slow data-transfer links that typically connect separate chips. For OpenAI, this solves a critical bottleneck: bandwidth. By keeping massive models entirely on-chip, the system avoids the "memory wall" that slows down large language models (LLMs) on conventional hardware.

Under the agreement, deployment will occur in phases starting in mid-2026. The infrastructure will be leased rather than bought outright, allowing OpenAI to scale capacity dynamically as demand for its o1 and future GPT-5 class models surges. The deal also provides a lifeline for Cerebras, positioning it as a formidable "second source" in the AI hardware market as it prepares for a potential IPO, with reports suggesting the startup is currently seeking to raise an additional $1 billion at a $22 billion valuation.

Strategic Shift: Securing The Agentic Future

OpenAI's decision to back a non-GPU architecture signals a broader shift in the industry's "compute strategy." As AI models transition from "chatbots" to "active agents" that perform complex multi-step workflows, the cost and speed of inference have become the primary constraints. OpenAI is effectively betting that NVIDIA's dominance in training does not guarantee its dominance in inference, where low latency and cost-per-token are paramount.

To support this transition, OpenAI and Cerebras have outlined a deployment roadmap focused on real-time performance and scalability:

- Phased Rollout of 100MW tranches beginning in Q3 2026 to stress-test agent workloads.

- Dedicated "Agent Clusters" optimised specifically for long-context reasoning tasks (e.g., coding, legal analysis).

- API Price Reductions expected for enterprise developers due to lower operational costs of wafer-scale efficiency.

- Hybrid Routing software that automatically directs "fast" queries to Cerebras chips and "heavy" training jobs to NVIDIA clusters.

- Joint R&D on "sparse computing" techniques to run models 50% more efficiently by 2027.
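The hybrid routing idea in the roadmap above can be illustrated with a small sketch. Neither company has published how the routing software works, so everything here is an assumption: the `Job` fields, the backend names, and the rule that only latency-sensitive inference goes to the wafer-scale pool.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    WAFER_SCALE = "wafer_scale"  # hypothetical low-latency inference pool
    GPU_CLUSTER = "gpu_cluster"  # hypothetical general-purpose GPU pool


@dataclass(frozen=True)
class Job:
    kind: str                      # "inference" or "training"
    latency_sensitive: bool = False


def route(job: Job) -> Backend:
    """Pick a backend for a job using a simple, assumed policy."""
    if job.kind == "training":
        # "Heavy" training jobs stay on GPU clusters.
        return Backend.GPU_CLUSTER
    if job.kind == "inference" and job.latency_sensitive:
        # "Fast" queries go to the wafer-scale pool.
        return Backend.WAFER_SCALE
    # Anything else falls back to the GPU pool.
    return Backend.GPU_CLUSTER


if __name__ == "__main__":
    print(route(Job("inference", latency_sensitive=True)).value)
    print(route(Job("training")).value)
```

A production router would also weigh queue depth, model placement, and cost per token; this sketch only shows the fast-versus-heavy split the roadmap describes.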

MedGemma 1.5 & MedASR: Google Opens The AI Hospital

Date: Jan 14, 2026

Google Research releases MedGemma 1.5 and MedASR, bringing 3D scan analysis and clinical voice recognition to open source. The models cut transcription errors by 58% vs. OpenAI.

Google Research has significantly expanded its open-source medical AI suite with the dual launch of MedGemma 1.5 and MedASR. Announced on Jan 14, 2026, this update marks a critical shift from proprietary "black box" medical tools to accessible, locally runnable models that prioritise patient privacy. The release is headlined by MedGemma 1.5, a lightweight 4B parameter multimodal model now capable of interpreting complex 3D volumetric data—such as CT scans and MRIs—alongside standard 2D X-rays.

This "glass box" approach aims to standardise the infrastructure of digital health. By releasing these weights on Hugging Face and Vertex AI, Google is effectively giving every hospital and med-tech startup the building blocks for an autonomous AI radiologist and scribe without the licensing fees of enterprise alternatives. The launch is bolstered by a $100,000 "MedGemma Impact Challenge" on Kaggle, incentivising developers to build novel diagnostic workflows on top of this free infrastructure.

MedGemma 1.5: The Jump to 3D Vision

The defining upgrade in MedGemma 1.5 is its ability to "see" in three dimensions. While previous iterations were limited to flat images, the new architecture can process multi-slice inputs, allowing it to understand the depth and volume of an organ. This capability is critical for longitudinal analysis, where the model compares current scans against historical patient data to track disease progression over time. Internal benchmarks show a 14% accuracy jump in MRI classification compared to its predecessor.

Despite its complex capabilities, the model is optimised for efficiency. At just 4 billion parameters, MedGemma 1.5 is small enough to run on local hospital servers or even high-end edge devices, mitigating data residency concerns. This allows radiologists to use AI as a "second opinion" tool that lives entirely within the hospital's secure firewall, analysing histopathology slides and locating anatomical anomalies without patient data ever leaving the room.

MedASR: Breaking The Typing Bottleneck

Alongside the vision model, Google introduced MedASR, a specialised speech-to-text model designed to handle the rapid-fire, jargon-heavy nature of clinical dictation. Built on the Conformer architecture and trained on 5,000 hours of medical audio, MedASR addresses the high failure rate of general-purpose transcription tools in hospital settings.

Data shows MedASR achieves a 5.2% Word Error Rate (WER) on chest X-ray dictations, 58% lower than OpenAI’s Whisper v3. This precision is vital for "ambient documentation," where AI listens to doctor-patient conversations to auto-fill Electronic Health Records (EHRs). Google envisions a seamless pipeline where MedASR transcribes a doctor's verbal request ("Show me the lesion on the L4 vertebra"), which is then instantly executed by MedGemma 1.5.
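For readers unfamiliar with the metric, WER is the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words. The sketch below is a minimal, generic implementation, not Google's evaluation code. The `implied_baseline` helper is a hypothetical name that simply inverts the quoted "58% lower" figure; the resulting baseline is derived arithmetic and does not appear in the source.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def implied_baseline(wer: float, relative_reduction: float) -> float:
    """Baseline WER implied by a relative reduction (derived, not quoted)."""
    return wer / (1.0 - relative_reduction)


if __name__ == "__main__":
    print(word_error_rate("show me the lesion", "show me a lesion"))  # 0.25
    print(round(implied_baseline(5.2, 0.58), 1))
```

Taken at face value, a 5.2% WER that is 58% lower than the baseline implies a baseline of roughly 12.4%.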

Future Roadmap & Integration:

- Direct-to-Action: Future updates will link MedASR directly to hospital ordering systems for voice-activated prescriptions.

- Multimodal Pipelines: Tutorials released for chaining MedASR (Voice) -> Text -> MedGemma (Visual Analysis) for hands-free diagnostics.

- Global Expansion: Support for non-English medical terminology planned for Q3 2026.

- Device Integration: Optimisation for running MedASR directly on smart badges and clinical tablets.
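The voice-to-analysis chain mentioned in the roadmap can be sketched generically. The code below does not call MedASR or MedGemma; the two stages are passed in as plain callables, and the stub functions in the usage section are placeholders invented for illustration. Real integrations would load the released model weights in their place.

```python
from typing import Callable, Dict


def voice_to_analysis(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    analyze: Callable[[str], str],
) -> Dict[str, str]:
    """Chain a speech-to-text stage into an analysis stage.

    `transcribe` stands in for a speech model and `analyze` for a
    multimodal model; both are assumptions supplied by the caller.
    """
    transcript = transcribe(audio)
    if not transcript.strip():
        # Refuse to forward an empty request to the analysis stage.
        raise ValueError("empty transcript")
    return {"transcript": transcript, "analysis": analyze(transcript)}


if __name__ == "__main__":
    # Placeholder stages so the sketch runs without any model installed.
    def fake_transcribe(audio: bytes) -> str:
        return "show me the lesion on the L4 vertebra"

    def fake_analyze(request: str) -> str:
        return f"[analysis placeholder for: {request}]"

    result = voice_to_analysis(b"\x00\x01", fake_transcribe, fake_analyze)
    print(result["transcript"])
    print(result["analysis"])
```

Keeping the stages as injected callables means either model can be swapped or mocked in tests without touching the pipeline logic.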
